An interesting article, essentially about wine, though it does cast its net wider. It is somewhat disingenuous in parts: it mentions that a lot of people watch standard definition programmes on high definition TVs, which is true, but it doesn't mention that those TVs upscale, so there is a real difference. Most of the "tests" mentioned in the early part of the article were also designed to fool people.

http://lifehacker.com/5990737/why-we-ca ... e-from-bad

Blind tests....

Vinyl - anything else is data storage.

Thorens TD124 Mk1

Kuzma Stogi 12"arm

HANA Red

GoldNote PH10 + PSU

ADI-2 DAC

Lector CDP7 via Kimber KS into Wyred4Sound pre

Airtight ATM1s

Klipsch Heresy IV

Ansuz P2 Speaker cable

Misc Mains RCA + XLR ICs

Alltheyoungdudes

Re: Blind tests....

Very interesting - and a lot of truth in that. I remember reading about a similar experiment where a group of people were given beer to drink and were then tested on their driving skills - after one, two, three, and four drinks etc. Needless to say, their driving skills deteriorated with each subsequent drink. But half the group were (unknowingly) drinking alcohol-free beer! Yet they displayed the same intoxication effects as the other half, who were drinking "real" beer with real alcohol.

Of course, when it comes to audio equipment - the more expensive - the better it sounds!

Re: Blind tests....

These articles invariably annoy the crap out of me. This one was no different!

Nerdcave: ...is no more!

Sitting Room: Wadia 581SE - Rega Planar 3/AT VM95ML & SH - Bluesound Node II - Copland CSA 100 - Audioplan Kontrast 3

Kitchen: WiiM Pro - Wadia 151 - B&W 685s2

Re: Blind tests....

Diapason wrote: These articles invariably annoy the crap out of me. This one was no different!

+1 Not sure if it is because I don't understand them or it is all too much of a hassle.

GroupBuySD DAC/First Watt AlephJ/NigeAmp/Audio PC's/Lampi L4.5 Dac/ Groupbuy AD1862 DHT Dac /Quad ESL63's.Tannoy Legacy Cheviots.

Re: Blind tests....

The same applies when judging Hifi equipment in a group vs. alone. That's why listening on your own is paramount when picking new gear... ;)

Re: Blind tests....

Yea, I remember one from not so long ago with headline news along the lines of "Stradivarius can't be told apart from modern violins".

They gathered about 6 professional violinists, brought them to a hotel room, put goggles on them & some scent on the violins to disguise any telltale smells & guess what, they couldn't tell the violins apart :). I doubt they would be able to tell the sound of their own mother under these circumstances, with their senses being assaulted so.

BTW, I put the cat among the pigeons on another favourite forum by reporting successful ABX blind tests of high-res Vs CD audio. I've always used science as the counter-argument to people that pretend science is on their side & cite null tests as evidence of "all X sound the same". The sound of lab rats scurrying out of the lab was hilarious. The few that remained sacrificed themselves for science which was honorable of them :)

But some are so thick or so entrenched in their views that they have no possibility of ever understanding science 101, proper experimental design or statistical analysis (all of which are needed for a proper blind test) & yet these are usually the most vociferous ones.

The thing that should now be asked is this: given that these ABX tests are proof that there is an audible difference in these particular files, & that these same files have been tested by many people over the last 10 years with null results returned (i.e. no difference), what does that say about all those null results & about blind testing in general? These are questions that the very people who always challenged others to a blind test don't want to face up to.
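For anyone wondering how an ABX score gets turned into "proof", the usual approach is a one-sided binomial test: how likely is a score at least this good from pure guessing? Below is a minimal Python sketch of that calculation. The function name and the example scores are my own illustrations, not figures from any of the tests discussed here.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Probability of getting at least `correct` answers right out of
    `trials` ABX trials by guessing alone (50% chance per trial)."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Count the ways to score `correct` or better, out of 2**trials outcomes.
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


# Hypothetical scores for illustration only.
for correct, trials in [(9, 10), (12, 16), (14, 16)]:
    print(f"{correct}/{trials}: p = {abx_p_value(correct, trials):.4f}")
```

A small p-value says guessing is an unlikely explanation for the score. Note the asymmetry: a large p-value does not show the files sound the same, only that this particular run could not rule out guessing.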

I see the article (which I only skimmed) mentions "the beast of expectation", but this is always mentioned in reference to how it leads us to hear things which "aren't there" because we expect to hear them (called a false positive in tests). It fails to mention the other side: not hearing something which is known to actually exist (called a false negative). So a blind test is meant to eliminate false positives, but it is never analysed just how prone it is to skew the results towards null results (false negatives).
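To put a rough number on the false negative side, here is a small Python sketch. It assumes a simple model that is mine, not the article's: a listener who really does hear the difference gets each trial right with some fixed probability `p_true`, and the test is "passed" only if the score would come up by guessing at most 5% of the time. All names and values are illustrative.

```python
from math import comb


def binom_tail(k_min: int, n: int, p: float) -> float:
    """P(X >= k_min) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


def pass_mark(trials: int, alpha: float = 0.05):
    """Smallest score whose probability under guessing is at most alpha.
    Returns None if even a perfect score cannot reach alpha."""
    for k in range(trials + 1):
        if binom_tail(k, trials, 0.5) <= alpha:
            return k
    return None


def false_negative_rate(trials: int, p_true: float, alpha: float = 0.05) -> float:
    """Chance that a listener who is right with probability p_true per trial
    still fails to reach the pass mark (assumed model, see lead-in)."""
    k = pass_mark(trials, alpha)
    if k is None:
        return 1.0  # too few trials: the test can never be passed
    return 1.0 - binom_tail(k, trials, p_true)


# Hypothetical listener who hears the difference 70% of the time.
for n in (10, 16, 50):
    print(n, pass_mark(n), round(false_negative_rate(n, 0.7), 3))
```

Under these assumptions, a 10-trial test needs 9 correct to pass, and a listener who is genuinely right 70% of the time fails it roughly 85% of the time. Short tests are very good at producing null results.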

www.Ciunas.biz

For Digital Audio playback that delivers WHERE the performers are on stage but more importantly WHY they are there.

Re: Blind tests....

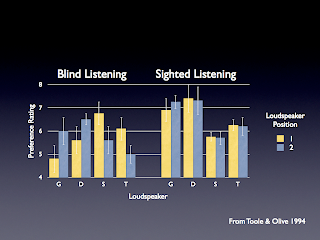

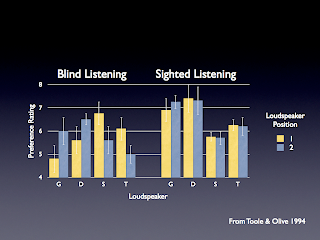

Here's a guy, Sean Olive, who is held up as the yardstick by objectivists & is a big supporter of blind testing. http://seanolive.blogspot.ie/2009/04/di ... oduct.html

Firstly, he works for Harman & many objectivists would normally discount anybody who works for an audio company, but not in this case. Now if you look at his test there are some interesting background facts that you need to find out:

- he tests speakers but only one speaker, not a stereo pair, as he claims that his past tests have shown a direct correlation between the results for mono Vs stereo. Yea, so he is ignoring sound stage, timing, & how many other aspects of our listening?

- if you look at that graph it actually demonstrates how the blind results dampen down the differences noted when sighted. The best liked speaker is still the best liked speaker when blind but is further ahead of the pack when listening sighted than when blind.

- some of the speakers tested were Harman speakers & some were from the competition & here's the really disingenuous bit: he used some Harman employees in the tests. Now, do we expect Harman employees to be unbiased in sighted tests when it's known what speakers are being used & the tester is from Harman? It just might influence the results a wee bit :)

www.Ciunas.biz

For Digital Audio playback that delivers WHERE the performers are on stage but more importantly WHY they are there.
